Between more substantial posts, I am constantly updating my Twitter stream.

(Twitter updates are also available in my sidebar)

Expect plenty of pithy and unsubstantiated entries.

I just got back from a wonderful (albeit short) break to visit Australia. Always great to see family and friends back home.

No incredibly insightful first post for the new year, sadly; I’m still enjoying the lazy feeling from a relaxing (warm!) Christmas.

So instead, here are some random Wikipedia links, inspired by visits to the beaches of Coolangatta:

A siphonophore is a colony of various organisms that together appear to be a single organism.

Siphonophores are especially scientifically interesting because they are composed of medusoid and polypoid zooids that are morphologically and functionally specialized. Each zooid is an individual, but their integration with each other is so strong that the colony attains the character of one large organism. Indeed, most of the zooids are so specialized that they lack the ability to survive on their own. Siphonophorae thus exist at the boundary between colonial and complex multicellular organisms. Also, because multicellular organisms have cells which, like zooids, are specialized and interdependent, siphonophores may provide clues regarding their evolution.

(I spotted several bluebottles on Bilinga beach)

The Portuguese Man O’ War has an air bladder (known as the pneumatophore or sail) that allows it to float on the surface of the ocean. This sail is translucent and tinged blue, purple or mauve. It may be 9 to 30 centimetres long and may extend as much as 15 centimetres above the water. The Portuguese Man O’ War secretes gas into its sail that is approximately the same in composition as the atmosphere, but may build up a high concentration of carbon dioxide (up to 90%). The sail must stay wet to ensure survival and every so often the Portuguese Man O’ War may roll slightly to wet the surface of the sail. To escape a surface attack, the sail can be deflated allowing the Man O’ War to briefly submerge.

Below the main body dangle long tentacles, which occasionally reach 50 meters (165 ft) in length below the surface, although one metre (three feet) is the average. The long tentacles “fish” continuously through the water and each tentacle bears stinging venom-filled nematocysts (coiled thread-like structures) which sting and kill small sea creatures such as small fish and shrimp. Muscles in each tentacle then contract and drag prey into range of the digestive polyps, the gastrozooids, another type of polyp that surrounds and digests the food by secreting a full range of enzymes that variously break down proteins, carbohydrates and fats. Gonozooids are responsible for reproduction.

Which then led me to the fascinating article on blanket octopi:

An unusual defense mechanism in the species has evolved: blanket octopuses are immune to the poisonous Portuguese man o’ war, whose tentacles the female rips off and uses later for defensive purposes. Also, unlike many other octopuses, the blanket octopus does not use ink to intimidate potential predators, but instead unfurls a large net-like membrane which then spreads out and billows in the water like a cape. This greatly increases the octopus’s apparent size, and is what gives the animal its name.

Yikes.

A two-fer today:

As early as the 1940s it was reported that female wasps of this species sting a roach twice, delivering venom. A 2003 study proved using radioactive labeling that the wasp stings precisely into specific ganglia of the roach. She delivers an initial sting to a thoracic ganglion and injects venom to mildly and reversibly paralyze the front legs of the insect. This facilitates the second venomous sting at a carefully chosen spot in the roach’s head ganglia (brain), in the section that controls the escape reflex. As a result of this sting, the roach will first groom extensively, and then become sluggish and fail to show normal escape responses.

The wasp proceeds to chew off half of each of the roach’s antennae. The wasp, which is too small to carry the roach, then leads the victim to the wasp’s burrow, by pulling one of the roach’s antennae in a manner similar to a leash. Once they reach the burrow, the wasp lays a white egg, about 2 mm long, on the roach’s abdomen. It then exits and proceeds to fill in the burrow entrance with pebbles, more to keep other predators out than to keep the roach in.

With its escape reflex disabled, the stung roach will simply rest in the burrow as the wasp’s egg hatches after about three days. The hatched larva lives and feeds for 4-5 days on the roach, then chews its way into its abdomen and proceeds to live as an endoparasitoid. Over a period of eight days, the wasp larva consumes the roach’s internal organs in an order which guarantees that the roach will stay alive, at least until the larva enters the pupal stage and forms a cocoon inside the roach’s body. Eventually the fully-grown wasp emerges from the roach’s body to begin its adult life.

In part one of Seligman and Steve Maier’s experiment, three groups of dogs were placed in harnesses. Group 1 dogs were simply put in the harnesses for a period of time and later released. Groups 2 and 3 consisted of “yoked pairs.” A dog in Group 2 would be intentionally subjected to pain by being given electric shocks, which the dog could end by pressing a lever. A Group 3 dog was wired in parallel with a Group 2 dog, receiving shocks of identical intensity and duration, but his lever didn’t stop the electric shocks. To a dog in Group 3, it seemed that the shock ended at random, because it was his paired dog in Group 2 that was causing it to stop. For Group 3 dogs, the shock was apparently “inescapable.” Group 1 and Group 2 dogs quickly recovered from the experience, but Group 3 dogs learned to be helpless, and exhibited symptoms similar to chronic clinical depression.

In part two of the Seligman and Maier experiment, these three groups of dogs were tested in a shuttle-box apparatus, in which the dogs could escape electric shocks by jumping over a low partition. For the most part, the Group 3 dogs, who had previously “learned” that nothing they did had any effect on the shocks, simply lay down passively and whined. Even though they could have easily escaped the shocks, the dogs didn’t try.

There was a great Schneier post about why he is so ‘into’ security, and how his mindset differs from so many other people. I was thinking tonight about why I’m so passionate about user experience and how to improve its general approach. I think what drives me boils down to the following:

Obviously it’s impossible to know exactly what other people think, so usually I ask them. What did you think about that? Why did you think that? Internally I build a mental model of how people with different views might interpret things. When I use something I can’t help but imagine how my grandmother might use it. Would my Dad know what to do next? How about my best mates? This isn’t limited to design; it extends to simply being in a group conversation. As people are relating stories I’m wondering “how will other people here interpret that?”. I can’t help but notice when there’s a gap there, and I often find myself interrupting two people who obviously don’t share the same understanding: “oh by the way John, I think what Fred really meant is this…”.

My wife hates this. She noticed it started just after I began my PhD. I’ve always been critical of my personal devices and the sites I use, but after starting a PhD in human-computer interaction I became hypercritical. It used to be that if I got stuck, I’d blame myself and look up the manual. Now, since I’d like to think I’m fairly savvy, when I find myself stuck it’s usually a usability problem. On a daily basis, my wife deals with a lot of my frustration. The two worst offenders for me at the moment are the PlayStation 3 system UI (what were they thinking? The company that brought us the simplicity and joy of the PSP interface took it and just broke it) and the new Google search interface (why are they crowding my results with multiple suggestions of what else I could search for? And what are all these new buttons everywhere? Way too much clutter).

As a product manager I understand that it is necessary to balance business requirements with usability. However, it is not good enough to say “well, this gives us x revenue, so even though it upsets the users, let’s keep it in there”. What about the lost y revenue from the people who stop coming to your site? By focussing on user experience above all else you give people a product that they keep coming back to. Lost revenue streams can usually be replaced. Obnoxious ads aren’t the be-all and end-all of making money on the internet. Creating something that makes people tell their friends how great it is (so long as you have a business plan for monetizing the traffic) is the best possible thing you can do. Companies such as Apple and Google show this again and again. I still believe user experience, balanced with business requirements, is key.

Every time I use a new product I like contemplating why its design is the way it is. Why did the Peek email device forgo all other online activity? Could its interface be better? Why does the iPhone not support MMS and video? Could their touch interface be done better? I can never be satisfied when using a product, as I’m always asking why.

Getting used to a particular way of doing things is great, as it reduces cognitive load. However, it’s often not the best way of doing it, nor the most intuitive. If you can find a balance of both, everyone wins. New paradigms for interaction should be supported, although I’m always happy to let someone else push them in their own designs and make them a success first, so that when I employ them people are already used to them.

Above everything else, assuming your users are “dumb” and that you should cater for the “lowest common denominator” is a bad idea. Why? Because they’re not dumb. They’re not the lowest common denominator. Different people have different needs, different mental models, and different approaches to completing a task. Reducing your design approach to “our users are dumb, let’s make it easy for them” is not usability; it’s a trap for unwary players. People are smarter than you think, and the designers who succeed are those who find the best ways to make use of their users’ tacit skills.

Overall I think usability is more a state of mind than a set of skills. But it’s a hard state of mind to become accustomed to. I wrote my PhD on how to better integrate engineers into the design process and make them aware of usability concerns, and my answer was that it’s hard (and “it depends”). But being cognizant of the difficulties users face, respecting them, and trying to anticipate these difficulties (feel free to just talk to them!) will make your design not just better but more successful.

This is great.

I’ve been using Amazon Mechanical Turk for a variety of different projects and I love it. It’s fantastic to actually be able to put a face to the people who do such an amazing job contributing.

Thanks Waxy.

I’ve really enjoyed the following blogs of late:

Tepom.com – amateur personal finance advice from Scott Bliss. Usually thoughtful and interesting posts for everyday financial issues.

Cake Wrecks – professional cakes gone wrong. Oddly addictive. I’m always surprised at the niches blogs can fill (see also Passive Aggressive Notes).

Smashing Magazine – superb site for all aspects of web design, with a bias towards graphic design (which I need a lot of help with).

Futuristic Play – Andrew Chen’s blog which I read for its excellent posts on viral marketing, product design and user experience.

FiveThirtyEight – Nate Silver’s incredibly addictive election predictor site. Sadly I probably won’t be back for a few more years now…

I’m a big fan of user review sites. I’ve been using TripAdvisor since at least 2002 to help plan my journeys, and Yelp is my new favourite site since moving to the US.

Crowdsourcing information is usually a pretty good way of doing things. There’s been plenty of research which has shown that if you get a crowd of people together and have them, for instance, guess the total number of jelly beans in a jar, the average guess will be pretty close to the real number. Translating this to something usable in everyday life, I’ve seen people have a lot of success with Amazon’s Mechanical Turk for aiding research, since you can use the sheer weight of numbers to smooth the data.
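The jelly-bean effect is easy to see in a small simulation. This is a minimal sketch under a strong assumption: every guess is the true count plus independent, unbiased Gaussian noise. The jar size, the noise level and the `crowd_average` function are hypothetical illustrations, not figures from any study.

```python
import random
import statistics


def crowd_average(true_count: int, n_guessers: int, noise_sd: float, seed: int = 0) -> float:
    """Return the mean of n simulated guesses at true_count.

    Assumption (hypothetical model): each guess is the true count plus
    independent, unbiased Gaussian noise with standard deviation noise_sd.
    """
    rng = random.Random(seed)  # seeded so the run is repeatable
    guesses = [rng.gauss(true_count, noise_sd) for _ in range(n_guessers)]
    return statistics.mean(guesses)


if __name__ == "__main__":
    jar = 850  # hypothetical number of jelly beans in the jar
    for n in (5, 50, 5000):
        estimate = crowd_average(jar, n, noise_sd=250)
        print(f"{n:>5} guessers: average guess {estimate:.0f} (true {jar})")
```

Individual guesses in this model are typically off by hundreds, but the error of the average shrinks roughly with the square root of the crowd size. That only works because the simulated errors are independent; averaging cancels independent errors, not errors everyone shares.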

This is great for something tangible and objective. However, as soon as you start throwing subjective data into the mix, results get a little skewed.

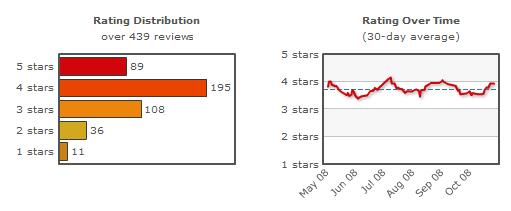

In particular, I’ve noticed lately that I can’t quite trust online reviews (from a wide variety of users) the way I used to. I’m not sure why things have gotten so skewed; perhaps I’m more discerning now, or maybe there are more outliers. Either way, I haven’t trusted the average rating on sites like Amazon, Yelp and TripAdvisor for a couple of years now. Instead I read a sample of reviews, then go straight to the upper and lower bounds and try to get a sense of why people are voting in a particular fashion. Is the product or place being unfairly penalized or rewarded? (For example, a hotel might get a lot of 1 star reviews for high parking fees but be great otherwise. A restaurant might get a lot of 5 star reviews because it’s cheap and has a nice ambiance, even though the food stinks.)
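That reading strategy (a random sample, then the extremes) is simple enough to sketch. This assumes the reviews have already been collected as (stars, text) pairs; the `Review` type, the `reviews_to_read` function and the demo reviews are hypothetical.

```python
from __future__ import annotations

import random
from typing import NamedTuple


class Review(NamedTuple):
    stars: int  # 1 to 5
    text: str


def reviews_to_read(
    reviews: list[Review],
    sample_size: int = 5,
    per_extreme: int = 3,
    seed: int | None = None,
) -> dict[str, list[Review]]:
    """Pick a random sample of reviews plus the lowest- and highest-rated ones.

    The extremes are returned separately so they can be read for the
    reasons behind them (e.g. parking fees, cheap prices, ambiance).
    """
    rng = random.Random(seed)
    sample = rng.sample(reviews, min(sample_size, len(reviews)))
    lowest = sorted(reviews, key=lambda r: r.stars)[:per_extreme]
    highest = sorted(reviews, key=lambda r: r.stars, reverse=True)[:per_extreme]
    return {"sample": sample, "lowest": lowest, "highest": highest}


if __name__ == "__main__":
    demo = [  # hypothetical reviews
        Review(1, "Great room, but the parking fees are outrageous."),
        Review(4, "Comfortable and central."),
        Review(5, "Cheap, with a lovely ambiance."),
        Review(3, "Fine for a night."),
        Review(2, "Food was poor."),
    ]
    picked = reviews_to_read(demo, sample_size=2, per_extreme=1, seed=42)
    for group, items in picked.items():
        print(group, [r.text for r in items])
```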

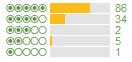

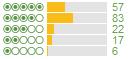

One method I’ve found for making a better decision is to look at the shape of the score curve. For example, here are the scores for the top 5 TripAdvisor hotels in San Francisco at the moment, ordered by TripAdvisor according to their average score:

[Chart: star-rating distributions for hotels 1 to 5, in TripAdvisor’s order]

There aren’t any that really stick out as being obviously different, but you can see that the fourth one gets a far higher ratio of 5 star ratings to 4 star ratings than the others. These differences become more pronounced the further down the overall list of hotels you go. For example, Hotel A is rated as a better hotel than Hotel B, which is ranked after it (according to its average):

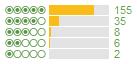

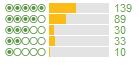

Hotel A:

Hotel B:

Whenever I see a curve difference like this though, I always go for the latter when booking. Since I changed tactics, I have been having great hotel experiences. To give this a quantifiable score to compare, I tried out the following formula:

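Let $v_x$ be the number of $x$-star votes and $N$ the total number of votes. For each star level $x$ from 2 to 5, choose a weight

$$
y_x = \begin{cases} x & \text{if } v_x - v_{x-1} > 0 \\ 5 - (x - 1) & \text{otherwise} \end{cases}
$$

and compute

$$
\text{score} = \frac{y_5 (v_5 - v_4) + y_4 (v_4 - v_3) + y_3 (v_3 - v_2) + y_2 (v_2 - v_1) + v_1}{N}
$$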
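A minimal Python sketch of the formula above, assuming the votes arrive as a mapping from star level (1 to 5) to vote count. The function name `curve_score` is illustrative. Each weight is chosen per step ($y_5$, $y_4$, and so on), which matches the individual terms in the worked sums below.

```python
def curve_score(votes: dict[int, int]) -> float:
    """Shape-aware score for a 1-5 star rating distribution.

    votes maps star level (1..5) to the number of votes at that level;
    missing levels count as zero votes.
    """
    total = sum(votes.get(star, 0) for star in range(1, 6))
    if total == 0:
        raise ValueError("cannot score a distribution with no votes")

    weighted = votes.get(1, 0)  # the raw 1-star count is added unweighted
    for x in range(2, 6):
        step = votes.get(x, 0) - votes.get(x - 1, 0)
        # Rising step (more x-star than (x-1)-star votes): weight by x.
        # Flat or falling step: weight by 5 - (x - 1).
        y = x if step > 0 else 5 - (x - 1)
        weighted += y * step
    return weighted / total


if __name__ == "__main__":
    # Hypothetical distribution that rises steadily towards 5 stars.
    example = {1: 2, 2: 4, 3: 10, 4: 30, 5: 60}
    print(f"{curve_score(example):.5f}")
```

The result is not on a 1 to 5 scale, so it is only useful for comparing hotels against each other. It also does nothing about the weighting issues around vote numbers mentioned in the November 2008 update.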

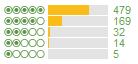

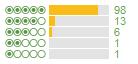

Using a formula such as this the new overall scores become:

(260 + 96 – 9 + 8 + 1) / 128 = 2.78125

(600 + 108 + 6 + 8 + 2) / 206 = 3.51456

(1550 + 548 + 54 + 18 + 5) / 699 = 3.11159

(425 + 28 + 15 + 0 + 1) / 119 = 3.94118

(1260 + 948 + 60 + 16 + 13) / 883 = 2.60136

Which as you can see results in a much better looking ranking for the curves:

[Chart: the same five hotels re-ranked by the new score, in the order 4, 2, 3, 1, 5]
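The new ranking can be checked directly from the five worked sums. This sketch copies their terms and vote totals verbatim and assumes the sums are listed in TripAdvisor’s original order (hotels 1 to 5), which is consistent with the re-ranked order 4, 2, 3, 1, 5.

```python
# Terms and vote totals copied from the five worked sums, keyed by each
# hotel's position in TripAdvisor's average-score ranking (assumed order).
worked = {
    1: ([260, 96, -9, 8, 1], 128),
    2: ([600, 108, 6, 8, 2], 206),
    3: ([1550, 548, 54, 18, 5], 699),
    4: ([425, 28, 15, 0, 1], 119),
    5: ([1260, 948, 60, 16, 13], 883),
}

# New score = sum of the weighted terms divided by the total number of votes.
scores = {hotel: sum(terms) / total for hotel, (terms, total) in worked.items()}

# Highest new score first.
new_order = sorted(scores, key=scores.get, reverse=True)

for hotel in new_order:
    print(f"hotel {hotel}: {scores[hotel]:.5f}")
print(new_order)  # prints [4, 2, 3, 1, 5]
```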

If we apply the formula to hotels A and B, we see the difference becomes more pronounced:

(-26 + 244 + 15 + 22 + 6) / 185 = 1.41081

(250 + 236 – 9 + 46 + 10) / 301 = 1.77076

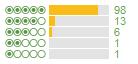

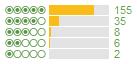

Introducing a new overall score like this would help people pick better hotels and encourage hotel owners to strive for higher ratings. I’m also a big fan of the trending data that Yelp has added recently, using Patxi’s as an example:

As someone who wrote his thesis on qualitative user feedback, I’ve found it really interesting to look at how you can properly interpret large amounts of quantitative data involving subjective scores.

Update November 7 2008: Thanks to Eric Liu for pointing out some weighting issues depending on vote numbers. We’re brainstorming some new algorithms to account for these situations.

In the meantime, anyone from the myriad of Netflix people who have stopped by, feel free to contact me! tim@<this domain>.

I’m completely addicted now. In-n-out. Krispy Kreme. Sibby’s Cupcakes. Fred steaks. And tonight’s indulgence? Chicago style deep-dish pizza from Patxi’s.

We ordered a 12″, which weighs nearly 2kg (it is 2″ deep). It takes 30 minutes to bake (compared to the usual 6 minutes or so for a thin crust pizza). The pizza actually consists of two layers. Ingredients are placed on a bottom layer of dough, another layer is added on top of that, and then the sauce is placed on top. We actually ordered the “low fat” version originally, but the kindly gentleman who took our order swiftly talked us out of that.

Delicious.

I know that ‘freemium’ is a popular business model at the moment, but do everyday web users really notice this when they’re using a site?

Personally, I always assume a site is free and am surprised/annoyed when it isn’t, unless it offers something very compelling or a freemium model (the best, in my opinion, is Flickr). However, a lot of sites (including Trovix!) put “free” in large type on their landing pages. The only rationales I can think of (beyond the rare case that most other examples of your product are paid) fall into two camps:

1) If you require an account to use the site, some people might think they will be asked for credit card details during sign-up. But how prevalent is this concern? It’s never come up in any focus groups or user testing. When asked directly, the people in my study groups unanimously assumed it would be free.

2) It’s used as an attention grabbing bit of text that is intended to make the user feel like they are getting something (which should be a paid service) for free. This just feels tacky, and depending on how it is done, can detract from the brand.

I was struck by Mint’s use of this tonight, which prompted this post:

Perhaps it is a cultural thing — I have seen many products that label themselves as ‘free’ in a scammy way. Regardless, unless everyone else is charging (or even if they are, like when Yahoo and Google introduced real time stock prices), it seems an unnecessary feature to draw attention to.

Sometimes when I’m searching for a particular product or service, the most frustrating thing is the way Google brings up mostly things to purchase. For example, recently I knew that a direct flight between Sydney and New York used to exist, and wanted to find out some information about it.

These are the top results:

To cut through this I like to use Google Blog Search, where I can usually find some helpful blogger who is talking about the concept I’m interested in. However sometimes, like this occasion where I’m essentially searching for old news, Google Blog Search fails to remove the cruft, as it tends to give an inordinate amount of weight to more recent posts:

European and Asian Stocks Fall Sharply

8 hours ago by Jack Kelly

Total, the French oil company, slipped 4.5 percent on heavy volume, as oil futures for November delivery on the New York Mercantile Exchange fell as much as $3.92 to $89.96 a barrel on the New York Mercantile Exchange. … CompliancEX – http://compliancex.typepad.com/compliancex/

Stocks Fall Sharply on Credit Concerns

5 hours ago by chrisy58

New York Times. http://www.nytimes.com/2008/10/07/business/07markets.html?_r=1&hp&oref=slogin. October 6, 2008. The selling on Wall Street began at the opening bell on Monday and only intensified as the morning went on. … Chrisy58’s Weblog – http://chrisy58.wordpress.com/

Credit Crisis Drives Stocks Down Sharply

2 hours ago by Ricardo Valenzuela

Crude oil was trading just over $89 a barrel in New York after 2 pm. President Bush made an unscheduled stop on Monday morning to speak about the crisis with owners of small businesses in San Antonio — and the television cameras that …

INTERMEX POWER – http://intermexfreemarket.blogspot.com/

As such, one of my most often used Google sites is Google Groups — its long-term archiving and generally high quality results combined with a more traditional Google search algorithm mean I can usually find very specific discussions I’m looking for. For example, in the past I’ve used it for finding out people’s experience with getting a green card, sourcing older tech support, or travel experiences. Lately though, Google Groups has been completely broken. Here are the results for my search:

Direct Flights Nassau To New York Group: obs27rruhuvtene

auwwb…@dipnoi.az.pl obs27rruhuvtene *Direct Flights Nassau To New York* <http://sebj2o8a3l.airlinetickets24.info/?i=30091017&data=airline+ticket> Direct Flights Nassau To New York … Flights New York Dublin <http://groups.google.com/group/obs27rruhuvtene/web/flights–new–york-dublin>, Flights Sydney To New York … Sep 16 by auwwb…@dipnoi.az.pl

Direct Flights New York Group: skfea3bmsss

mvzdilis…@anosmia.az.pl skfea3bmsss *Direct Flights New York* <http://0vcz7v.airlinetickets24.info/?i=30091020&data=airline+ticket> Direct Flights New York … Larnaca cheap flights, Wholesale cheap airline tickets, Cheap flights from cardiff to majorca, Airline tickets bid, Cheap flights from brisbane to sydney, … Sep 19 by mvzdilis…@anosmia.az.pl

Cheap Flights From Sydney To Aukland Group: Data Access rodeo1a

Amsterdam 59.00 New York 179.00 Bangkok 389.00 Caribbean 299.00 Sydney 639.00 Flights Sydney Auckland Compare and book Cheap FlightFlights Sydney Auckland … Sydney.Cheap Flights to Sydney Australia from UK Airports TRAVELBAGTravelbag provides cheap flights to Sydney flights from London direct flights Sydney.to … Apr 24 by susannabatchelorb8b…@gmail.com

It seems like Google has opened group creation to anyone with a Google account. This, combined with the long-broken Google CAPTCHA, means Groups is getting completely clogged up. I went 250 pages in (which in itself surprised me, as you can only go 100 pages using normal Google search) and the spam was still there.

So I tried a few other searches I’ve done lately.

Roomba battery? Broken.

Olympic games? Partly broken.

Travel to Ghent? Broken.

This one probably isn’t fair.

Anyway, there certainly is a lot of crap in there.

I’ve been noticing how useless Groups has been for several months now and assumed Google would do the usual thing and fix it up pretty fast. I just checked, and the last mention of Groups on the official Google blog was in January 2007, when the new version was taken out of beta, meaning it’s a problem that wasn’t fixed in the 18 months it was in beta.

I heard one of the reasons why PicasaWeb limits a user’s space (as opposed to Flickr’s unlimited paid service, a sore point I won’t discuss now) is to “prevent spam” — if they can choose to fundamentally cripple a product in their fight against spam, why not fix one that is broken by spam?

Update: Apparently even more spam issues at hand with Google Groups.